WebXR, 2nd session, WebVR + WebAR:

A word of warning: Chromium-WebAR is not a production-ready framework. It is only a proposal to lay the foundations of WebAR. Consequently, developers ARE expected to run into issues and to spark debate.

Magic?

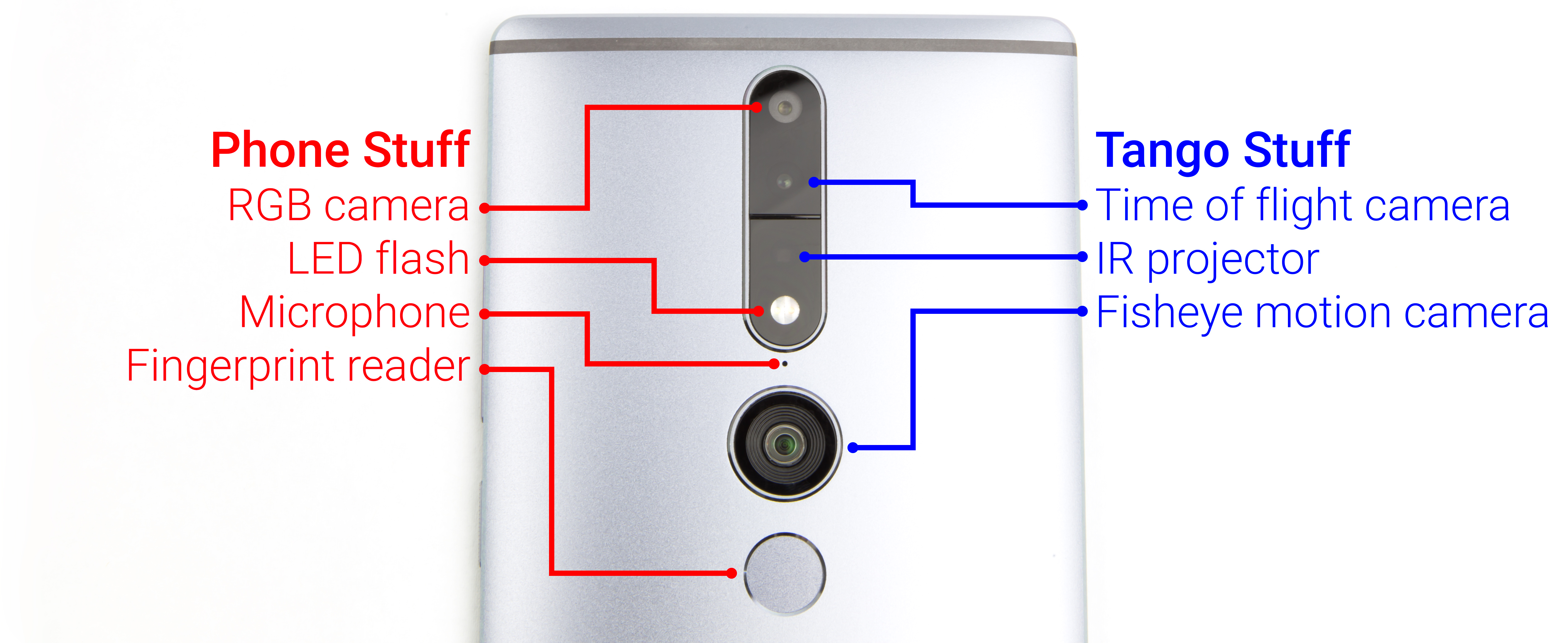

Step 1: have hardware that sends out, and detects only, your own kind of light.

Step 2: measure how long it takes for your light to come back.

Step 3: took little time? The point is close. Took a long time? The point is far. Repeat this across the whole field of view and you get a cloud of points. Process it, do magic.

Step 4 (bonus): texture it using your wide-angle RGB camera.
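Steps 2 and 3 boil down to simple arithmetic: the light covers the distance twice, so distance = c · t / 2. The sketch below shows that, plus one way to turn a grid of such distances into a point cloud. It assumes an idealised pinhole sensor with hypothetical intrinsics (fx, fy, cx, cy) and a camera space with z pointing forward; real depth sensors do this in hardware or firmware with their own calibration, so this is an illustration, not how Chromium-WebAR builds its point cloud.

```typescript
// Speed of light in a vacuum, in metres per second.
const SPEED_OF_LIGHT_M_PER_S = 299792458;

// Steps 2-3: the light travels to the surface and back, so the one-way
// distance is half of the round-trip path.
function distanceFromRoundTrip(roundTripSeconds: number): number {
  return (SPEED_OF_LIGHT_M_PER_S * roundTripSeconds) / 2;
}

interface Point3 {
  x: number;
  y: number;
  z: number;
}

// Hypothetical pinhole intrinsics, in pixels: focal lengths and principal point.
interface Intrinsics {
  fx: number;
  fy: number;
  cx: number;
  cy: number;
}

// Turn a row-major image of measured distances (metres, 0 = no return)
// into a point cloud in camera space (z pointing forward).
function distancesToPointCloud(
  distances: number[],
  width: number,
  height: number,
  k: Intrinsics,
): Point3[] {
  const points: Point3[] = [];
  for (let v = 0; v < height; v++) {
    for (let u = 0; u < width; u++) {
      const r = distances[v * width + u];
      if (!(r > 0)) continue; // no light came back for this pixel
      // Direction of the ray through pixel (u, v), before normalisation.
      const dx = (u - k.cx) / k.fx;
      const dy = (v - k.cy) / k.fy;
      // Scale the ray so the point lies exactly r metres from the sensor.
      const s = r / Math.hypot(dx, dy, 1);
      points.push({ x: dx * s, y: dy * s, z: s });
    }
  }
  return points;
}
```

For example, a round trip of 20 nanoseconds corresponds to a point about 3 m away, which is why these sensors need very precise timing.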

Foundations

- Concepts of AR

- in practice, though, all you get here is your position (motion tracking) relative to a low-resolution model of the room (depth perception)

- WebVR API, in particular navigator.getVRDisplays() and VRPose

- WebAR API

- threejs

- THREE.WebAR
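In WebVR 1.1, navigator.getVRDisplays() resolves to a list of VRDisplay objects, and each frame VRDisplay.getFrameData() fills a VRFrameData whose pose is a VRPose: position is [x, y, z] and orientation is a quaternion [x, y, z, w], and either may be null. Motion tracking therefore reduces to turning that pose into a transform. The sketch below does by hand what three.js normally does for you (e.g. with Matrix4.compose); PoseLike is a hypothetical stand-in type so the snippet runs outside a browser.

```typescript
// Minimal subset of the WebVR 1.1 VRPose fields used here. Both can be
// null, e.g. position on devices without positional tracking.
interface PoseLike {
  position: ArrayLike<number> | null; // [x, y, z] in metres
  orientation: ArrayLike<number> | null; // unit quaternion [x, y, z, w]
}

// Build a column-major 4x4 matrix (the layout used by WebGL and three.js)
// that rotates by the pose orientation, then translates by its position.
function poseToMatrix(pose: PoseLike): number[] {
  const [px, py, pz] = pose.position ? Array.from(pose.position) : [0, 0, 0];
  const [x, y, z, w] = pose.orientation ? Array.from(pose.orientation) : [0, 0, 0, 1];
  return [
    // Column 0: image of the local X axis.
    1 - 2 * (y * y + z * z), 2 * (x * y + z * w), 2 * (x * z - y * w), 0,
    // Column 1: image of the local Y axis.
    2 * (x * y - z * w), 1 - 2 * (x * x + z * z), 2 * (y * z + x * w), 0,
    // Column 2: image of the local Z axis.
    2 * (x * z + y * w), 2 * (y * z - x * w), 1 - 2 * (x * x + y * y), 0,
    // Column 3: translation.
    px, py, pz, 1,
  ];
}
```

In a WebVR browser you would pass frameData.pose after calling vrDisplay.getFrameData(frameData); THREE.WebAR and three.js take care of this step in the examples.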

Testing

Examples to explore, in this order (increasing complexity):

- video to show content over "reality"

- picking to place it correctly

- occlusion to realistically show 3D content
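Occlusion comes down to a per-pixel depth comparison: virtual content should only be drawn where it is closer to the camera than the real surface measured at that pixel. On the GPU this is just the depth test once real-world depth is in the depth buffer; the CPU sketch below only spells out the rule. The array layout and the conventions "real depth 0 means unknown" and "virtual depth Infinity means nothing to draw" are assumptions for illustration, not what the occlusion example does internally.

```typescript
// Decide, pixel by pixel, whether virtual content is visible over reality.
// Both arrays hold distances from the camera in metres, row-major.
function visibilityMask(virtualDepth: number[], realDepth: number[]): boolean[] {
  if (virtualDepth.length !== realDepth.length) {
    throw new Error("depth images must have the same size");
  }
  return virtualDepth.map((vd, i) => {
    if (!Number.isFinite(vd)) return false; // no virtual content at this pixel
    const rd = realDepth[i];
    if (!(rd > 0)) return true; // reality unknown here: draw the virtual content
    return vd < rd; // visible only when in front of the real surface
  });
}
```

Drawing virtual content everywhere the sensor has no data is a design choice: it avoids holes in the content, at the cost of occasionally showing it through real objects.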

Note that there are a few more complex examples, such as an object catalogue with measuring (the WayFair Google I/O 2017 prototype) or distance-based point cloud coloring, but they have not been released yet.
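Distance-based point cloud coloring has not been released, but the idea fits in a few lines: normalise each point's distance between a near and a far limit, then blend between two colours. The sketch assumes point positions are expressed relative to the camera; the limits, the colours and the linear blend are arbitrary choices for illustration. The output is a flat RGB array with components in [0, 1], the shape a three.js BufferGeometry "color" attribute uses.

```typescript
type RGB = [number, number, number]; // components in [0, 1]

// Hypothetical colouring: near points red, far points blue, linear in between.
function colorByDistance(
  positions: Float32Array, // flat [x0, y0, z0, x1, y1, z1, ...], camera-relative
  near: number,
  far: number,
  nearColor: RGB = [1, 0, 0],
  farColor: RGB = [0, 0, 1],
): Float32Array {
  const colors = new Float32Array(positions.length);
  for (let i = 0; i < positions.length; i += 3) {
    const d = Math.hypot(positions[i], positions[i + 1], positions[i + 2]);
    // 0 at `near`, 1 at `far`, clamped outside that range.
    const t = Math.min(1, Math.max(0, (d - near) / (far - near)));
    for (let c = 0; c < 3; c++) {
      colors[i + c] = nearColor[c] + (farColor[c] - nearColor[c]) * t;
    }
  }
  return colors;
}
```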

Going further

- MeasureHandler.js to build a bounding box for content, relying on more general threejs code:

- Grid.js to display a grid over a flat surface

- Lines.js to display lines

- NumberText.js to display numbers

Note that this entire application, the WayFair prototype, is built on THREE.WebAR.positionAndRotateObject3DWithPickingPointAndPlaneInPointCloud, used to pick 4 points in space: the first 3 define a plane and the last one defines a volume. It might look like it uses more complex features, but it does not, hence the importance of this function in the API.
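The 4-point measurement reduces to a little vector algebra. The sketch below is one possible interpretation, not the WayFair prototype's code: p0→p1 gives the length of one edge, the in-plane distance from p2 to that edge gives the width, and the distance from p3 to the base plane gives the height. In the app each point would come from picking; here they are plain [x, y, z] tuples in metres, and every helper name is hypothetical.

```typescript
type Vec3 = [number, number, number];

const sub = (a: Vec3, b: Vec3): Vec3 => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const dot = (a: Vec3, b: Vec3): number => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const cross = (a: Vec3, b: Vec3): Vec3 => [
  a[1] * b[2] - a[2] * b[1],
  a[2] * b[0] - a[0] * b[2],
  a[0] * b[1] - a[1] * b[0],
];
const norm = (a: Vec3): number => Math.hypot(a[0], a[1], a[2]);
const unit = (a: Vec3): Vec3 => {
  const l = norm(a);
  return [a[0] / l, a[1] / l, a[2] / l];
};

interface BoxMeasure {
  length: number;
  width: number;
  height: number;
  volume: number;
}

// p0, p1, p2: picked on the supporting surface (they define the base plane).
// p3: picked at the top of the object (it defines the height).
function measureBox(p0: Vec3, p1: Vec3, p2: Vec3, p3: Vec3): BoxMeasure {
  const edge = sub(p1, p0);
  const n = unit(cross(edge, sub(p2, p0))); // base-plane normal
  const side = unit(cross(n, edge)); // in the plane, perpendicular to the edge
  const len = norm(edge);
  const width = Math.abs(dot(sub(p2, p1), side));
  // Absolute values make the result independent of the picking order.
  const height = Math.abs(dot(sub(p3, p0), n));
  return { length: len, width, height, volume: len * width * height };
}
```

Displaying the result is then a matter of drawing the box edges and the numbers, which is what Lines.js and NumberText.js are listed for.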

Functions of the API

- createVRSeeThroughCamera(vrDisplay, near, far) / createVRSeeThroughCameraMesh(vrDisplay, fallbackVideoPath) : to use at setup

- VRPointCloud(vrDisplay), with getBufferGeometry() / update(updateBufferGeometry, pointsToSkip) : point cloud of the scene

- getPickingPointAndPlaneInPointCloud(pos.x, pos.y) : get a point and a plane in the environment. This is basically the most useful function: you point at your screen and get back a point in space, together with a flat surface you can interact with. Most of the rest is built on this specific function; most other functions are (for now) "just" setup to make it possible and to display the result.

- positionAndRotateObject3DWithPickingPointAndPlaneInPointCloud(pointAndPlane, object3d, scale) : to position an object

- resizeVRSeeThroughCamera(vrDisplay, camera) : to use on screen resize, e.g. when the device rotates

- updateCameraMeshOrientation(vrDisplay, cameraMesh) : to use on update

- getIndexFromOrientation(orientation) : utility function

- getIndexFromScreenAndSeeThroughCameraOrientations(vrDisplay) : utility function
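Since positionAndRotateObject3DWithPickingPointAndPlaneInPointCloud carries so much of the work, it helps to see what placing an object on a picked surface involves. The sketch below is a guess at the underlying math, not THREE.WebAR's implementation: it assumes the picked plane is available as a point plus a surface normal, and computes the shortest rotation taking the object's local up axis (+Y, the three.js convention) onto that normal. In three.js itself, Quaternion.setFromUnitVectors performs the same rotation step.

```typescript
type Tuple3 = [number, number, number];
type Quat = [number, number, number, number]; // [x, y, z, w]

// Shortest-arc rotation taking unit vector `from` onto unit vector `to`.
function quatFromUnitVectors(from: Tuple3, to: Tuple3): Quat {
  const d = from[0] * to[0] + from[1] * to[1] + from[2] * to[2];
  if (d < -1 + 1e-6) {
    // Opposite vectors: rotate 180 degrees around any axis perpendicular to `from`.
    const axis: Tuple3 = Math.abs(from[0]) > Math.abs(from[2])
      ? [-from[1], from[0], 0]
      : [0, -from[2], from[1]];
    const l = Math.hypot(axis[0], axis[1], axis[2]);
    return [axis[0] / l, axis[1] / l, axis[2] / l, 0];
  }
  // Vector part cross(from, to), scalar part 1 + dot, then normalise.
  const q: Quat = [
    from[1] * to[2] - from[2] * to[1],
    from[2] * to[0] - from[0] * to[2],
    from[0] * to[1] - from[1] * to[0],
    1 + d,
  ];
  const l = Math.hypot(q[0], q[1], q[2], q[3]);
  return [q[0] / l, q[1] / l, q[2] / l, q[3] / l];
}

interface Placement {
  position: Tuple3;
  quaternion: Quat;
  scale: number;
}

// Hypothetical placement: stand the object on the picked surface,
// with its local +Y axis along the (not necessarily unit) surface normal.
function placeOnSurface(point: Tuple3, normal: Tuple3, scale = 1): Placement {
  const n = Math.hypot(normal[0], normal[1], normal[2]);
  const unitNormal: Tuple3 = [normal[0] / n, normal[1] / n, normal[2] / n];
  return { position: point, quaternion: quatFromUnitVectors([0, 1, 0], unitNormal), scale };
}
```

The result maps directly onto a three.js Object3D: object3d.position.set(...), object3d.quaternion.set(x, y, z, w) and object3d.scale.setScalar(scale).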

What's not there yet

- mesh reconstruction (only the point cloud is available; going further in real time still requires quite a bit of processing power)

- area learning (though enableADF is under discussion)

Making

It's now your turn to play with all that. Assuming you have at least skimmed through everything, from the concepts to the examples and finally the key functions,

then

Note that the Chromium-WebAR browser includes a QR code scanner: see the button at the top right.

So once you have remixed your project, just scan its QR code to test your content!

Going MUCH further

This was a very short introduction to grasp the basic concepts and build a WebAR experience in under an hour. There is much more to do and learn: check the references to see how all this is done, to find the relevant Slack, etc.